Screenshot of Hatebase's map of “recent sightings” of hate speech around the world, based on crowdsourced entries uploaded to the database.

Is there any way to predict a genocide? What happens first – before the worst?

According to an article published by Genocide Watch, genocide is a process that unfolds in several stages, each of which can be identified and addressed as it occurs. Language-based classification, or symbolization, is one of these stages.

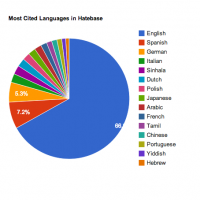

Screenshot of a graph showing the most-uploaded terms in Hatebase, by language.

Hatebase is a crowdsourced database of hate speech built around this idea of identification and prevention. Through the database, individuals can upload reports of “sightings” and vocabulary, indicating where in the world the terms have been used. Founder Timothy Quinn said in an email interview that “the key to understanding Hatebase is to see it as a layer of data — like traffic on top of a city map.”

The database was built to help government agencies, NGOs, research organizations and others gauge the prevalence of hate speech as a predictor of regional violence.

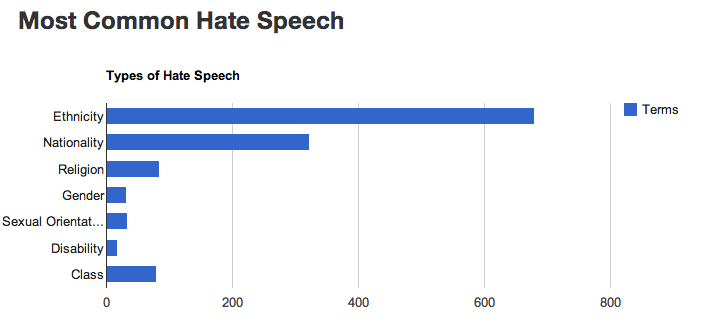

The database asks users to contribute “sightings” by location and language, tagged by topic such as ethnicity, nationality, gender or sexual orientation. At the time of writing, the system holds 60,000 timestamped, geotagged sightings.

To let other organizations use the data in their own applications, the developers have invested heavily in building an open API. As Quinn explains:

Our interest is in having NGOs like us integrate it with their own data to help them make informed decisions about where to devote human, material and financial resources… Our hope is that more people will eventually be using the API to understand our dataset than the website.

Defining Terms

But what exactly is “hate speech”? From the website:

Hate speech is difficult to quantify, but most people would agree with Justice Potter Stewart's famous sentiment: “I know it when I see it.” Hatebase defines hate speech as any term which broadly categorizes a specific group of people based on malignant, qualitative and/or subjective attributes — particularly if those attributes pertain to ethnicity, nationality, religion, sexuality, disability or class.

Hatebase asks contributors to consider the following questions before adding a term to the database:

1. Does it refer to a specific group of people or is it a generalized insult? If the latter, it's probably not hate speech.

2. Can it potentially be used with malicious intent? If not, it's probably not hate speech.

3. Are there objective third-party sources online which can be used as citations? If not, it's probably not hate speech.

4. If you were to write a program which monitors hate speech on Twitter, would finding it in a random tweet be potentially meaningful? If not, it's probably not hate speech.
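Read together, the four questions work as a simple conjunction: a term is worth submitting only if it passes all of them, and failing any single one suggests it is probably not hate speech. The minimal Python sketch below encodes that screening logic. The `TermScreening` class and `passes_screening` function are hypothetical names chosen for illustration; they are not part of Hatebase or its API.

```python
from dataclasses import dataclass


@dataclass
class TermScreening:
    """A contributor's yes/no answers to the four screening questions.

    Hypothetical structure for illustration only; not Hatebase's data model.
    """
    refers_to_specific_group: bool    # Q1: a specific group, not a generalized insult
    can_be_used_maliciously: bool     # Q2: potential for malicious intent
    has_third_party_citations: bool   # Q3: objective third-party sources exist online
    meaningful_in_random_tweet: bool  # Q4: finding it in a random tweet would be meaningful


def passes_screening(answers: TermScreening) -> bool:
    """Return True only if the term passes every question.

    Each question names a condition under which a term is "probably not
    hate speech", so a single failed question screens the term out.
    """
    return all((
        answers.refers_to_specific_group,
        answers.can_be_used_maliciously,
        answers.has_third_party_citations,
        answers.meaningful_in_random_tweet,
    ))


if __name__ == "__main__":
    # A generalized insult fails Q1, so it is screened out.
    generic_insult = TermScreening(False, True, True, True)
    print(passes_screening(generic_insult))  # False
```

Passing the screen only makes a term a candidate for the database; the questions are guidance for human judgment, not a classifier.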

For more information, read about their work at The Sentinel Project and follow Hatebase on Twitter at @hatebase_org.